(Throughout this portfolio, clickable links are indicated by underlined text. Feel free to explore additional resources and project sections by clicking on the underlined words.)

Word Count: 5500 words

Project Brief & Role

For our final year, we were tasked with completing a work placement related to our disciplines. As a Game Design & Development student with a strong passion for game production, I was thrilled to secure the role of Game Producer and Lead Game Developer for Tomebound, working three days a week with RaRa Games. RaRa Games was my live client in my second year, when I contributed to the project as a Game Developer.

Sustainable Development

Reflecting on my role in this project, I've become more mindful of my own consumer behaviour, striving to balance needs and wants while prioritising sustainable choices. This aligns closely with Sustainable Development Goal 12 (United Nations, 2024), which promotes responsible consumption and production. I made it a priority to encourage the team to adopt these principles, ensuring sustainability is integrated throughout our development process.

In practice, we’ve focused on optimising code to reduce computational load and extending asset reusability across levels, while minimising resource waste. The ethical implications of our work, including designing an accessible UI and using efficient technologies, have been carefully considered. We’ve also ensured our content respects diverse cultures and perspectives, reinforcing the importance of responsible consumption in game development.


01

Production

The role of a Game Producer bleeds into many different areas, but at its core, it is to oversee the development of a video game - ensuring the project is completed efficiently, to a high standard, on time and within budget. When I took on the role of Producer for Tomebound, one of the immediate challenges was the lack of an established production pipeline or roadmap. Much of the prototyping had been done individually, with little to no communication between team members. Consequently, my first task was establishing a production pipeline and determining the best way to manage the team and track progress.

Game development is inherently iterative. It requires the flexibility to adapt to changes in creative direction and to any roadblocks that arise, so both needed to be considered when choosing a framework. In this case, I felt an agile framework in the form of Scrumban was the best option (Atlassian, 2024). Scrumban combines two approaches. The first is Scrum, in which project work is broken down and completed in short sprints of one to four weeks to support efficient time management (Scrum.org, 2024). The second is Kanban, which uses a project board such as Trello to visually manage task progress and allocation (Martins, 2024).

To put this framework into practice, I created a Trello board setting out our sprint deadlines and targets across five two-week sprints. This kept team members focused on smaller, more manageable tasks each week, assigned according to the game's current iterative needs. As a result, features were completed more quickly and workflow issues surfaced earlier, allowing me to help team members solve problems or move them onto a more suitable task.

The board itself is split into 9 columns:

- Sprint Goals - Sprint dates and milestones, ticked off as they are completed.
- To Do/Backlog - All tasks remaining to be completed.
- Bugs - Outstanding bugs identified in the game.
- Current Sprint - Tasks to be completed within the current sprint.
- In Progress - Tasks currently being worked on, moved here from Current Sprint once work has begun.
- Next Up - Tasks scheduled for the next sprint.
- Completed - All completed task cards, including bug fixes.
- Blocked - Tasks that have been started but are waiting on the completion of another feature or system.
- On Hold - Features on hold until higher-priority base tasks are completed.

The most important task was identifying the current bugs and backlog. This required me to rigorously test the game and all its mechanics and to review every asset and script. The Creative Director and I kept the board up to date, so an accurate view of the entire project was always available. Team members updated only their assigned cards as they made progress.

Tomebound Trello board
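The column rules above can be sketched as a small workflow check. This is a hypothetical Python illustration, not something the Trello board itself enforced. The column names come from the list. The permitted moves in `ALLOWED_MOVES` and the `move_card` helper are assumptions.

```python
# Hypothetical model of the Tomebound Trello columns as a simple workflow.
# Column names come from the board; the permitted moves are assumptions.
ALLOWED_MOVES = {
    "To Do/Backlog": {"Next Up", "Current Sprint", "On Hold"},
    "Bugs": {"Current Sprint", "Next Up"},
    "Next Up": {"Current Sprint"},
    "Current Sprint": {"In Progress"},
    "In Progress": {"Completed", "Blocked"},
    "Blocked": {"In Progress"},
    "On Hold": {"To Do/Backlog", "Current Sprint"},
    "Completed": set(),
}


def move_card(card: dict, target: str) -> dict:
    """Return a copy of the card in the target column, or raise if the move is not allowed."""
    current = card["column"]
    if target not in ALLOWED_MOVES.get(current, set()):
        raise ValueError(f"Cannot move '{card['title']}' from {current} to {target}")
    return {**card, "column": target}
```

For example, `move_card({"title": "Mimic attack", "column": "Current Sprint"}, "In Progress")` succeeds. Moving the same card straight to Completed raises an error, because it skips the In Progress column.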

Once I had completed the Kanban board, I set up the communication and Scrum side of the Scrumban framework.

I began by reorganising the existing Discord server with more relevant communication channels. I also added an information channel that gave team members easy access to quick-reference information and links. Within this channel, I created several forms and documents. These included a weekly feature reporting form that gave a clear and concise view of each week's progress, for the Creative Director and me to review in our meeting. I also created a bug reporting form so that bugs could be reported efficiently, with all the required information compiled in a spreadsheet. Beyond this, I made a number of other resources: an updated keybinds chart for the game, a system overview and a team availability chart. The channel also linked to the project Google Drive, the Trello board and a page for booking a meeting with me. The team received this channel well, with feedback stating that it reduced the burden of remembering so much important information.

Onboarding

Once our team of 10 was confirmed, I held an initial meeting to set out the expectations and timeline for the 10 weeks of project work, including an overview of how Scrumban fits into our workflows.

The team largely consisted of 3D artists and game designers with little or no Unity experience. I therefore held separate meetings with each of them to onboard them and teach them the basics of Unity, including how to set up and use Unity Version Control. I also wrote a quick-reference guide for the team.

Meetings

During our two-week sprints, I held weekly meetings, with every second meeting marking the end of a sprint. As a remote studio, staying informed about progress and issues outside of one-on-one communications was crucial; hence, I wanted to ensure the team had regular opportunities to share updates and give peer feedback.

Before each meeting, team members were required to upload their feature reporting form and update their Trello cards. Compliance was low at first because some members forgot to submit their forms on time, but addressing this early quickly improved the situation. During sprint meetings, each member presented their completed work and discussed any issues. They then received feedback from the Creative Director, their peers and me. At the end of each sprint, I assigned new tasks or specified the changes needed, and gave an update on the project's progress.

Throughout this time, I supported the team on an ad hoc basis, as a core part of my role as Producer is to ensure the team can do their jobs without roadblocks. Being the Lead Developer as well allowed me to resolve technical issues quickly. These included bugs and changes to mechanics based on feedback, which were particularly common in level design. Over the placement, I held 14 individual meetings with team members to provide advice and help with their work. There were also many unscheduled conversations, both online and in person. I made it a priority to respond promptly to queries, even if only to acknowledge receipt.

Stakeholder liaison

As the Game Producer and Lead Developer, my role involved extensive communication with the key stakeholder - the Creative Director, who is also the founder of the studio and funds the project. This relationship was crucial in guiding the development process and ensuring that all technical and creative decisions were approved and aligned with the overall vision for the game.

Over these 10 weeks, I also held weekly meetings with the Creative Director, alongside the weekly sprint meetings with the team. In these meetings, I reported on progress, reviewed features and discussed potential changes to the project, timelines and production. They were instrumental in shaping the wider direction of the game, allowing for bigger-picture thinking than the focused tasks at sprint level.

Documentation & Roadmap

As part of my role, I also created the core structure of a live game design document. This is a single reference containing all the high-level information about Tomebound, from its core narrative and roadmap to a review and guide of the game's development systems, e.g. UI, Audio and Contextual Interactions. I have not yet added specific information about the systems in Unity, as these are being refactored to work more efficiently together. The document will be updated once this is complete. As part of this process, I also drafted a roadmap (shown right) for the game's release, based on current plans and expected future team size.

The base game design document can be viewed here.


Game System Overview

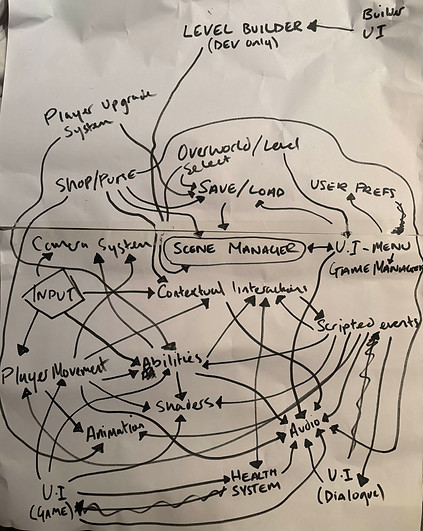

As part of refactoring the game scripts, assets, and systems, we needed a document that would show how the systems work together within the game's structure.

The Game Director began drawing up the basis of this system (shown left), and together, we fleshed out which systems should work together and in which direction systems should exchange information. Once completed, I took this plan and digitised it in the form of a Miro board for easier consumption, as seen below.

The board can be seen directly on Miro here.

Before (above) and after (below) of Game Ecosystem

Website and Steam Page

Another area I worked on was the website and Steam page. Whilst we are still very early in development, we are approaching the period in which we should be announcing Tomebound, and hence, having at least a basic studio website and a Steam page to begin attracting players and wishlists is important. The completed website can be viewed here.

As we are still awaiting more polished in-game videos and renders, I have used placeholders for the library and capsule images. However, the game descriptions, FAQs and About section are complete, along with tailor-made icons and a logo from the Creative Director. As a result, only minor tweaks should be needed before the game announcement goes live. The Steam page was the very last task I completed during this placement, and it felt like it brought the team's hard work full circle. Although the page is not live yet, seeing Tomebound on Steam was very exciting.

Communication

Throughout my experience as a producer, my focus has been on clear and appropriate communication. While this matters in any team, strong cross-functional communication is vital in a role such as Producer that steers the success of a project. Managing a team of 10 allowed me to develop my communication skills from a leadership standpoint. It also let me practise adapting my style to the person and setting, and I consistently sought feedback to ensure I was giving each team member what they needed. I aimed to make all my communications, verbal or written, as clear as possible. Where I thought something might need clarification, I explained and signposted it at the initial point of exchange, saving team members confusion and time spent waiting for answers. It was also important to me that the team could approach me whenever needed without fear of judgement. This fostered mutual respect and trust, which enabled us to work more efficiently and solve problems more quickly.

02

Development

I also held the role of Lead Game Developer for Tomebound, overseeing the game's development, implementing new systems and fixing many bugs. During the 10 weeks, I checked in over 110 changes to Unity Version Control, ranging from scene corrections and bug fixes to building new systems. Documentation is now held within the project as README files. The following sections detail a few of the tasks completed during our five sprints.

All changesets checked in during placement period

Bug Fixing

When I took on this role, Tomebound was not in a playable state. Many individual elements were complete, but they had not been adapted or integrated to work together as a game. In the first sprint, I therefore combined the basic functionality into a playable build. At the same time, I added all known bugs to the Trello board, to be handled according to the severity of their impact on gameplay. The team reported the bugs they found via the bug reporting form, and I reviewed each report on submission. A few notable bugs that affected gameplay are detailed below.

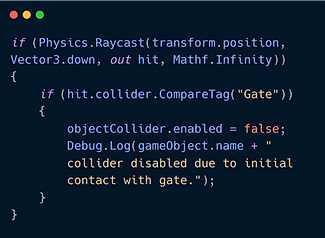

Players unable to travel between floors

After conducting some tests, it became clear that the issue stemmed from a tile in front of the exit gate, obstructing the raycast from the gate collider. To address this, I developed a new script for the tile prefab that checks for any objects tagged as 'gate' and disables the tile's box collider when in contact. Initially, I used an OverlapBox (Unity Technologies, 2024a) to detect the gate object, which worked as a quick fix. However, a few weeks later, we encountered a bug where movable objects would fall through tiles. It turned out that the OverlapBox was incorrectly disabling the box colliders of nearby tiles, causing the issue.

To resolve this, I revised the logic to use a downward Physics.Raycast (Unity Technologies, 2019), which proved more efficient and precise. This change ensured that only the tile directly above the gate collider had its box collider disabled, preventing the unintended behaviour.
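A grid-cell sketch shows why the downward raycast behaved better than the OverlapBox. It is written in Python rather than Unity C#, and it reduces both physics queries to hypothetical hit tests. `overlap_box_hits` treats any gate within `half_extent` cells as a contact, which is the over-generous case. `raycast_down_hits` only matches a gate in the tile's own cell. `Tile`, `make_grid` and `half_extent` are illustrative names, not the project's code.

```python
from dataclasses import dataclass


@dataclass
class Tile:
    x: int
    z: int
    collider_enabled: bool = True


def make_grid(width: int, depth: int) -> list[Tile]:
    """Build a fresh grid of tiles with all colliders enabled."""
    return [Tile(x, z) for x in range(width) for z in range(depth)]


def overlap_box_hits(tile: Tile, gate_cells: set, half_extent: int = 1) -> bool:
    # Box query: any gate within half_extent cells counts as "in contact",
    # so neighbouring tiles are caught too.
    return any(abs(tile.x - gx) <= half_extent and abs(tile.z - gz) <= half_extent
               for gx, gz in gate_cells)


def raycast_down_hits(tile: Tile, gate_cells: set) -> bool:
    # A straight-down ray from the tile's centre only hits a gate in the same cell.
    return (tile.x, tile.z) in gate_cells


def disable_tiles_over_gates(tiles: list[Tile], gate_cells: set, hit_test) -> list[tuple]:
    """Disable colliders on tiles the hit test matches; return the disabled cells."""
    for tile in tiles:
        if hit_test(tile, gate_cells):
            tile.collider_enabled = False
    return [(t.x, t.z) for t in tiles if not t.collider_enabled]
```

On a 3×3 grid with one gate in the centre, the box query disables all nine tiles, while the raycast disables only the centre tile. This matches the symptom of movable objects falling through nearby tiles.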

Book floating out of reach of player

This bug would occur when one player grabbed and then released the book, causing the object to rise incorrectly and float out of reach. I implemented a height management system in the Action_Grab script to solve this. When the book was grabbed, I set its height to a fixed value, ensuring it remained at an appropriate level between players. This was achieved using a coroutine to smoothly adjust the book’s position, preventing it from floating upwards during interactions when already being held. The system also accounted for when the book was released, ensuring it stayed in place and didn't display unwanted behaviour. This solution effectively eliminated the floating issue, ensuring the book remained within reach of both players.

Before - Book floating out of reach

After - Book responding correctly to grab
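The smoothing coroutine described above might look something like this Python generator, which stands in for a Unity coroutine by yielding once per frame. The target height, `speed` and `tolerance` values are assumptions. Each frame moves the book a fixed fraction of the remaining distance towards a set height, so it settles there instead of drifting upwards.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def hold_height(start_y: float, target_y: float, speed: float = 10.0,
                dt: float = 1 / 60, tolerance: float = 1e-3):
    """Ease the book's height towards target_y, yielding the new height each frame."""
    y = start_y
    while abs(y - target_y) > tolerance:
        # Move a fixed fraction of the remaining distance each frame.
        y = lerp(y, target_y, min(1.0, speed * dt))
        yield y
    yield target_y  # snap exactly onto the target once close enough
```

Because each step covers only a fraction of the remaining gap, the book approaches the target without overshooting it, which is what keeps it within reach of both players.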

Diagonal movement

As Tomebound uses grid-based movement, movement must only occur along the X or Z axis, one axis at a time. However, testing the levels showed that the existing system did not prevent diagonal movement, which in turn affected player movement and abilities.

To prevent diagonal movement, I added a check in the MoveObject method. When movement input is received, the script checks whether both the x and z values of the direction vector are non-zero (Unity Technologies, n.d.), which indicates diagonal movement. If so, the movement is stopped immediately and a warning is logged. The script also adjusts the movement so that only one axis, either x or z, can be active at a time. This prevents simultaneous movement along both axes and ensures the object only moves in the four cardinal directions: north, south, east or west.

Before - Diagonal movement present

After - Movement on X and Z axis only
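Here is a minimal Python sketch of the MoveObject check, assuming the input arrives as an (x, z) pair. `cardinal_step` and the `deadzone` parameter are hypothetical. The dead zone is an added assumption, so that slight analogue-stick drift on one axis is not mistaken for a diagonal.

```python
import logging

logger = logging.getLogger("movement")


def _sign(value: float) -> int:
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def cardinal_step(dx: float, dz: float, deadzone: float = 0.1) -> tuple[int, int]:
    """Convert a movement input into a single-cell step along one axis."""
    # Treat tiny stick drift as no input on that axis (assumed dead zone).
    if abs(dx) < deadzone:
        dx = 0.0
    if abs(dz) < deadzone:
        dz = 0.0
    # Both axes active means a diagonal: stop the movement and log a warning.
    if dx != 0 and dz != 0:
        logger.warning("Diagonal movement blocked (dx=%s, dz=%s)", dx, dz)
        return (0, 0)
    # Otherwise move exactly one grid cell along the single active axis.
    return (_sign(dx), _sign(dz))
```

For example, `cardinal_step(0.7, 0.05)` yields `(1, 0)`, a step east, while `cardinal_step(1, 1)` is rejected as a diagonal.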

Movement blocked after drone use

When this bug was first raised, it was unclear why movement was blocked on what appeared to be random tiles. The bug was difficult to recreate and caused considerable frustration during playtesting. Further testing, including inspecting the Scene view and Inspector in Play mode, showed that the drones were not properly deactivated or destroyed once used. Their colliders remained on the grid, creating invisible obstructions that blocked movement through the affected tiles.

Initially, I tried addressing the problem by disabling the drone’s collider at the end of the ability and assumed that removing its physical presence would resolve the issue. However, I soon discovered that while the collider was inactive, the drone object remained in the scene, continuing to interact with other systems and causing unexpected behaviour.

After further investigation, I realised the root cause was that the drone clone was not fully removed from the game. To fix this, I added two steps to the EndAbilityMode method in the Action_Abilities script. First, I disabled all renderers associated with the drone, visually removing it from the scene. Second, I deactivated the drone object to stop its interactions, then explicitly destroyed it with a Destroy(localDrones) call. This resolved the issue, as drones were now properly cleaned up after use.

Raycast blocked by drone after deactivation

Drone successfully deactivated and destroyed
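The clean-up order in EndAbilityMode can be illustrated with a simplified Python model in which a cell is blocked by any live object whose collider sits on it. `Drone`, `Scene` and `end_ability_mode` are hypothetical stand-ins for the Unity objects and the `Destroy(localDrones)` call. They are not the Action_Abilities code itself.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare drones by identity, like scene objects
class Drone:
    cell: tuple
    renderers_visible: bool = True
    active: bool = True
    collider_enabled: bool = True


@dataclass
class Scene:
    objects: list = field(default_factory=list)

    def is_blocked(self, cell: tuple) -> bool:
        # A cell is blocked by any live object whose collider sits on it.
        return any(o.active and o.collider_enabled and o.cell == cell for o in self.objects)

    def destroy(self, obj) -> None:
        # Stand-in for Destroy(): remove the object from the scene entirely.
        self.objects.remove(obj)


def end_ability_mode(scene: Scene, drone: Drone) -> None:
    drone.renderers_visible = False  # step 1: hide every renderer
    drone.active = False             # step 2a: stop all interactions
    scene.destroy(drone)             # step 2b: remove the clone from the scene
```

Disabling only the collider, as in the first attempt, would leave the object registered in the scene, where other systems could still interact with it. Destroying the object removes it completely.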

Feature Implementation

Fireflies

Towards the end of the first sprint, to add some movement to the scene, I used a VFX graph (Unity Technologies, 2024b) (shown right) to create the effect of fireflies floating around the scene. The goal was to have fireflies that constantly spawn and fade, adding a sense of realism and atmosphere to the environment.

I set up a spawn system that constantly emits particles, ensuring the fireflies appear and disappear in a loop. I then added a volume-based emitter, with a shape set to a cone, to distribute the particles naturally across the area. For the movement, I added a slight turbulence force to make the fireflies float randomly, which gave them a more organic feel. I also adjusted the colour and size of the particles to make them glow, enhancing the effect with a soft orange hue to match the fire-themed aesthetic of the current game area.

One issue I ran into was that the fireflies would spawn too quickly, causing them to appear in clusters rather than spreading out across the scene. To fix this, I reduced the spawn rate, added a randomised lifetime for each particle, and edited the size of the particles. This not only made the fireflies appear more spread out and varied but also gave the effect a more natural, erratic behaviour.

Initial attempt - Single burst, clustered & too large

Final version

In the final version, the fireflies continuously spawn, float around, and fade in and out, adding some life to the scene without being overbearing.

In game comparison with (left) and without (right) fireflies
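The firefly fix is essentially rate-based spawning with randomised lifetimes. VFX Graph configures this visually, so the Python below is only a conceptual sketch. `simulate` and the spawn rate, lifetime and size ranges are all assumed values. The fade curve is a simple triangle ramp, not something taken from the graph.

```python
import random
from dataclasses import dataclass


@dataclass
class Firefly:
    age: float
    lifetime: float
    size: float

    def alpha(self) -> float:
        # Fade in over the first half of life, fade out over the second half.
        t = self.age / self.lifetime
        return 1.0 - abs(2.0 * t - 1.0)


def simulate(spawn_rate: float, duration: float, dt: float = 0.1,
             lifetime_range=(2.0, 5.0), size_range=(0.02, 0.05), seed: int = 0):
    """Run a constant-rate emitter and return the fireflies still alive at the end."""
    rng = random.Random(seed)
    alive, spawn_accumulator = [], 0.0
    for _ in range(int(duration / dt)):
        # Constant rate: accumulate fractional spawns instead of one big burst.
        spawn_accumulator += spawn_rate * dt
        while spawn_accumulator >= 1.0:
            spawn_accumulator -= 1.0
            alive.append(Firefly(0.0, rng.uniform(*lifetime_range), rng.uniform(*size_range)))
        for fly in alive:
            fly.age += dt
        # Particles expire individually thanks to their randomised lifetimes.
        alive = [fly for fly in alive if fly.age < fly.lifetime]
    return alive
```

Spawning from an accumulator at a constant rate spreads particles evenly over time. Giving each particle its own lifetime staggers when they disappear. Together, these avoid the clustered look of a single burst.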

Mimic Enemy

Last year, while working on Tomebound as a live client project, I prototyped a basic mimic enemy, which can be seen here. This enemy mirrors the selected player character's movement and attacks them when they collide. Now that levels are being put together, I needed to improve the enemy's functionality and visuals. The initial version of the script implemented the basic mimic behaviour, tracking and following a single player character. To expand on this, I broke the required upgrades down into parts.

Player Tracking

The first task was to update the mimic's logic to track whichever player character is selected. The Update method now checks the floor positions of both players using the PartyTracker script. If neither player is on the mimic's floor, the mimic transitions into its rock form and becomes inactive, toggling the mimicRockForm and mimicEnemyForm GameObjects within its prefab.

However, if the selected player is on the same floor as the mimic enemy, it will take its enemy form and proceed to mimic the movement of that character using the FollowPlayer function - updating in real time as the player switches between characters.

After some discussion, we also decided that the mimic should only be present on the second and third floors, so that the player can see its transition from rock to enemy form. To make this easier for level designers to enforce, I added an editor function that prevents the game from being played if a mimic is placed on the first floor. The script also now finds the player GameObjects automatically.

Mimic movement
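The form-switching rules and the first-floor restriction can be summarised in a short Python sketch. `choose_form`, `validate_mimic_floor` and the player names are hypothetical. The source does not say what happens when only the unselected player shares the mimic's floor, so the sketch assumes the mimic stays in rock form in that case.

```python
from enum import Enum


class MimicForm(Enum):
    ROCK = "rock"    # dormant: mimicRockForm shown
    ENEMY = "enemy"  # active: mimicEnemyForm shown


def choose_form(mimic_floor: int, player_floors: dict[str, int], selected: str) -> MimicForm:
    """Pick the mimic's form from the players' floors (hypothetical helper)."""
    # Neither player is on the mimic's floor: go dormant.
    if all(floor != mimic_floor for floor in player_floors.values()):
        return MimicForm.ROCK
    # The selected player shares the floor: wake up and mirror them.
    if player_floors[selected] == mimic_floor:
        return MimicForm.ENEMY
    # Assumption: only the unselected player shares the floor, so stay dormant.
    return MimicForm.ROCK


def validate_mimic_floor(floor: int) -> None:
    """Editor-style check: refuse to run if a mimic sits on the first floor."""
    if floor == 1:
        raise ValueError("Mimics must be placed on the second or third floor")
```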

Mirrored movement

Another element I added was mirrored movement. This lets our level designer set the axis along which the mimic mirrors the player, depending on the direction it is facing. To do this, I modified the mimic's direction of movement based on the player's last known position and adjusted it with horizontal or vertical mirroring. The script uses the mirrorHorizontally bool to decide whether the mimic mirrors the player's movement horizontally or vertically.

This ensures that the mimic’s movements are inverted relative to the player’s, creating more challenging levels where players must outmanoeuvre the mimic to progress. Additionally, to prevent the mimic from getting stuck or moving through obstacles, raycasting is used in the IsObstacleInDirection method to check for barriers in its path and stop the mimic from moving through them. This also allows obstacles to be built into the level design to guide or trap the mimic.
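Here is a Python sketch of one mirrored step on the grid. It assumes horizontal mirroring negates the x component and vertical mirroring negates z. It also replaces the IsObstacleInDirection raycast with a hypothetical `obstacles` set of blocked cells. The function names are illustrative.

```python
def mirror_direction(dx: int, dz: int, mirror_horizontally: bool) -> tuple[int, int]:
    # Assumed convention: horizontal mirroring flips x, vertical mirroring flips z.
    return (-dx, dz) if mirror_horizontally else (dx, -dz)


def next_mimic_cell(mimic_cell: tuple[int, int], player_step: tuple[int, int],
                    mirror_horizontally: bool, obstacles: set) -> tuple[int, int]:
    """Return the mimic's next cell after mirroring the player's step."""
    dx, dz = mirror_direction(*player_step, mirror_horizontally)
    target = (mimic_cell[0] + dx, mimic_cell[1] + dz)
    # Stand-in for IsObstacleInDirection: stay put if the target cell is blocked.
    return mimic_cell if target in obstacles else target
```

With horizontal mirroring, a player stepping east sends the mimic west. Placing an obstacle in that cell holds the mimic in place, which is how level geometry can guide or trap it.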

Animation

I also added a simple animation controller and used a blend tree to adjust animations dynamically based on the mimic's movement direction.

In the blend tree, I used Horizontal and Vertical parameters to control the mimic's movement animations. These parameters represent the direction and intensity of the mimic’s movement along the X and Z axes, respectively (Unity Technologies, 2024c). By updating these parameters in real-time, the blend tree transitions between different animation clips to match the mimic’s motion.

Mimic movement - Horizontal & vertical mirroring

Attack

Next, I added the mimic's attack ability. Using raycasting, the mimic detects whether a player is within attack range. Its attack method then triggers an attack animation and a damage event, which calls the player's Health script to apply damage.

The mimic's attacks also have a cooldown (hitInterval), which gives the player time to move away. If they do not, the mimic attacks again after 3 seconds (a video of the mimic's attack is in the Health section below). This forces players to be cautious around the mimic, adding tension to its encounters.
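The cooldown logic reduces to a timestamp comparison. In this Python sketch, `hit_interval` uses the 3 seconds mentioned above. `damage`, `attack_range` and the distance check, which stands in for the raycast, are assumptions. `MimicAttack` is a hypothetical class name.

```python
class MimicAttack:
    """Deals damage only when the player is in range and the cooldown has elapsed."""

    def __init__(self, damage: int = 1, hit_interval: float = 3.0, attack_range: float = 1.0):
        self.damage = damage
        self.hit_interval = hit_interval
        self.attack_range = attack_range
        self._last_hit = None  # time of the last successful hit

    def try_attack(self, now: float, distance_to_player: float, player_health: int) -> int:
        """Return the player's health after this attack attempt."""
        in_range = distance_to_player <= self.attack_range
        ready = self._last_hit is None or now - self._last_hit >= self.hit_interval
        if in_range and ready:
            self._last_hit = now
            return max(0, player_health - self.damage)
        return player_health
```

A player who stays in range after the first hit takes damage again only once `hit_interval` seconds have passed.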

Death & Respawn

The death and respawn system was another element I reworked. The mimic now dies when it collides with water and respawns on collision with lava. Each of these interactions also triggers animations and VFX, with its own coroutine managing the sequence.

The respawn coroutine first delays the respawn, then transitions the mimic back to its rock form. I added the delay in response to feedback that the respawn was so quick it looked like a glitch. Now, when the mimic collides with lava, there is ample time for the animation to play, making it clear to players that the mimic is respawning. To signal the respawn event, I also reused the tornado VFX that one of our game designers created for the mimic's initial spawn, giving the player clearer feedback. I also added some rocks rotating around the mimic for polish.

With some extra time, I'd have liked to implement better animations and create VFX timed specifically for respawning. However, this is something that can easily be added later, as the core mechanics are now implemented.

Mimic death

Mimic respawn

Dynamic UI

One of the next systems I implemented was the dynamic UI, which had several main requirements:

- Player Selection
- Updating the character profile image and selected player indicator
- Activating the relevant movement indicator icons based on the directional movement available at the time
- Responsive action and ability icons based on which abilities and actions are available (drone, portal, grab, switch player etc.)
- Updating UI icons to reflect player input (keyboard and mouse or controller)
- Floor indicators
- A functioning health system with responsive on-screen UI and a game over screen

The PCUI and ConsoleUI scripts hold the methods that toggle the relevant icons. The GameManager controls when these changes are called, based on the status of other mechanics in the game. The GameManager script also controls the player outline indicators. I initially built these systems into the GameManager because much of the earlier prototyping had placed these controls there. As part of refactoring the game systems, however, much of this logic will be separated into individual, more modular scripts so that the GameManager is not weighed down with low-level detail.

Player Selection

One of the first things I tackled was updating the UI to reflect which player was selected. This involved toggling profile images, action buttons, and movement indicators to match the active character.

The SwapPlayer method handles switching between players and ensures that only the relevant UI elements for the active player are displayed. I used helper functions like SetActiveUIs to avoid duplicating code, which made the system easier to update later. However, movement indicators posed a challenge - both characters could sometimes activate multiple indicators simultaneously. To fix this, I changed the prioritisation in the UpdateMovementIndicators method so that only the relevant indicators would appear at any given time.
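This sketch shows the SwapPlayer idea with a SetActiveUIs-style helper, modelling UI visibility as a Python dictionary. The element names in `PLAYER_UI` are hypothetical. The sketch hides every other player's elements before showing the active player's, so only one set is ever visible.

```python
# Hypothetical UI element names for each player character.
PLAYER_UI = {
    "PlayerA": ["profile_a", "actions_a", "move_indicators_a"],
    "PlayerB": ["profile_b", "actions_b", "move_indicators_b"],
}


def set_active_uis(ui_state: dict, names: list[str], active: bool) -> None:
    """Toggle a group of UI elements at once (stand-in for SetActiveUIs)."""
    for name in names:
        ui_state[name] = active


def swap_player(ui_state: dict, new_player: str) -> dict:
    """Hide every other player's UI, then show the new active player's UI."""
    for player, names in PLAYER_UI.items():
        if player != new_player:
            set_active_uis(ui_state, names, False)
    set_active_uis(ui_state, PLAYER_UI[new_player], True)
    return ui_state
```

Routing every toggle through one helper means adding a new UI element only requires updating the mapping, not each call site.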

Updating Movement & Ability Indicators

I used a separate method to control the state of various indicators, toggling their visibility based on the characters' grabbing states, ability usage and movement constraints. These need to be updated dynamically depending on what each player can do. Initially, the system wasn’t efficient - updating UI components constantly, even when nothing had changed. This caused some noticeable lag and performance bottlenecking, so I resolved this by adding flags to track the state of each component and only updating them when necessary. This simple change made the UI feel much more responsive and significantly reduced performance issues.

Player selected & associated movement restriction UI

Book in proximity/grabbable UI

Abilities - Drone usage UI

Abilities - Portal usage UI
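The flag-based fix for the indicators can be sketched as a cache of the last visibility written for each indicator. `IndicatorUI` and `ui_writes` are hypothetical names in this Python illustration. `ui_writes` stands in for whatever UI call, such as toggling a GameObject, is being avoided.

```python
class IndicatorUI:
    """Only pushes a visibility change to the UI when the value actually changes."""

    def __init__(self):
        self._visible = {}   # last visibility written per indicator
        self.ui_writes = 0   # counts the (expensive) UI updates performed

    def set_visible(self, name: str, visible: bool) -> bool:
        """Return True if a UI update was performed."""
        if self._visible.get(name) == visible:
            return False  # nothing changed, so skip the UI update
        self._visible[name] = visible
        self.ui_writes += 1  # stand-in for toggling the real UI element
        return True
```

Calling `set_visible("grab", True)` every frame for 100 frames performs a single UI update. Another update happens only when the value changes.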

Input Device Detection and UI Adjustments

A key feature of the UI system is its ability to dynamically adjust based on the player’s input method, transitioning between PC and console UI layouts. To achieve this, I implemented the CheckForControllers coroutine, which periodically monitors the connected input devices. When a change is detected - such as plugging in or disconnecting a gamepad - the system updates the UI to match the active input method.

One early challenge with this system was desynchronisation between input detection and UI updates: sometimes the wrong UI remained displayed after a device change. I resolved this by linking the input detection logic directly to the UI update calls, so the UI updates immediately whenever a device change occurs. The coroutine-based approach keeps the performance impact minimal, as the checks run at intervals rather than every frame.

This feature has been particularly helpful during gameplay transitions, where players may switch from a keyboard and mouse setup to a controller mid-session. By utilising Unity’s Input System (Unity Technologies, 2024d), I was able to implement this functionality while focusing on real-time responsiveness and stability.

Automatic switching of UI on connection of gamepad
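A Python generator can mimic the CheckForControllers coroutine. Each iteration checks the device count and yields a wait time, much as a Unity coroutine would yield a `WaitForSeconds`. `get_gamepad_count` and `apply_layout` are hypothetical callbacks passed in as parameters. In Unity, the count might come from something like `Gamepad.all.Count`.

```python
from typing import Callable, Iterator


def check_for_controllers(get_gamepad_count: Callable[[], int],
                          apply_layout: Callable[[str], None],
                          interval: float = 1.0) -> Iterator[float]:
    """Poll for gamepads and switch the UI layout only when the device state changes."""
    current = None
    while True:
        layout = "console" if get_gamepad_count() > 0 else "pc"
        if layout != current:
            current = layout
            apply_layout(layout)  # update the UI in the same step as detection
        yield interval  # wait before the next check
```

Polling keeps the logic simple. The Input System also offers `InputSystem.onDeviceChange`, an event-driven alternative that avoids periodic checks altogether.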

Health system and UI

The health system UI was another important gameplay element I worked on. While the underlying health mechanics were already in place, I refined the system to ensure it worked seamlessly within the live game environment. The health UI features heart icons that update dynamically as players take damage, providing immediate feedback on their health status.

To enhance the system further, I implemented a game-over screen that activates when a player’s health is fully depleted. This required modifying the enemy scripts to ensure they properly triggered damage events and integrating these events with the UI.

Health UI - Mimic attack
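Here is a minimal Python sketch of the heart display and game-over trigger. `heart_icons` and `apply_damage` are hypothetical helpers. The game-over callback fires only when health first reaches zero, so repeated hits on an already-defeated player do not trigger it twice.

```python
from typing import Callable


def heart_icons(health: int, max_health: int) -> list[bool]:
    """Return the state of each heart icon (True = full heart)."""
    health = max(0, min(health, max_health))
    return [i < health for i in range(max_health)]


def apply_damage(health: int, damage: int, on_game_over: Callable[[], None]) -> int:
    """Apply damage and trigger the game-over screen when health first hits zero."""
    new_health = max(0, health - damage)
    if new_health == 0 and health > 0:
        on_game_over()
    return new_health
```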

Floor Indicator

The floor indicator system, managed by the UpdateFloorUI method, ensures that players can easily see which floor they are currently on. This method starts by validating the presence of a controlling object and logging its name for debugging purposes. It then retrieves the BaseObjectData component containing the floorID and uses this value to determine which floor indicator to activate.

At the start of the method, both PC and console UI components are reset to clear any previously active indicators. A switch statement then activates the appropriate indicator for the current floor, and the same logic is applied to the console-specific UI if a gamepad is connected. Debug warnings are triggered for invalid floor IDs, making potential data issues easier to identify and resolve during development.

One of the more challenging aspects of this feature was ensuring consistency between the PC and console UIs. Debug logging played a key role here, helping to identify and fix synchronisation issues. The final implementation ensures that floor indicators update accurately and remain consistent across both input methods.
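The reset-then-activate pattern in UpdateFloorUI can be sketched in Python as follows. The sketch assumes three floors with 1-based floor IDs, based on the floors mentioned elsewhere. An index lookup stands in for the switch statement, and the hypothetical `update_floor_ui` returns plain lists instead of toggling real UI objects.

```python
import logging

FLOOR_COUNT = 3  # assumption: three floors, IDs 1 to 3


def update_floor_ui(floor_id: int, gamepad_connected: bool) -> tuple[list[bool], list[bool]]:
    """Return the active state of each floor indicator for the PC and console UIs."""
    # Reset both UIs first so no stale indicator stays lit.
    pc = [False] * FLOOR_COUNT
    console = [False] * FLOOR_COUNT
    if not 1 <= floor_id <= FLOOR_COUNT:
        logging.warning("Invalid floorID %s; no floor indicator activated", floor_id)
        return pc, console
    pc[floor_id - 1] = True
    if gamepad_connected:
        console[floor_id - 1] = True  # mirror the same state on the console UI
    return pc, console
```

Resetting everything before activating one indicator means the two layouts cannot drift apart, which was the synchronisation problem described above.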

Throughout the development of the dynamic UI system, I encountered several unexpected challenges. However, these experiences taught me the importance of modular design, performance optimisation and clear debugging practices. By breaking the UI system down into smaller, focused methods and optimising the update logic, I was able to create a dynamic, responsive interface that improves the overall gameplay experience.

Player Animation

Another feature I completed was enabling both player characters to animate simultaneously while carrying the book. At handover, only one player could animate at a time, and the grab and walk animations did not work as expected. Starting from scratch, I created the PlayerAnimationManager, whose Update method handles animation states dynamically based on game conditions. If both players are grabbing the book, as indicated by a bool in the GameManager, the HandleBothPlayersMovement method makes the player not in control mimic the controlling player's movement. It retrieves the movement direction from the GameManager and updates the passenger's Animator parameters accordingly, simulating its movement. When only one player character is moving, the HandleIndividualPlayerMovement method sets animation parameters based on that player's own movement, retrieved from its GridBasedMovement1 script.

A key feature is layer-mask-based animation blending (Unity Technologies, 2024e), which I learnt to use for the first time during this task. It ensures smooth transitions between upper and lower body animations. Using Unity's animation layers and Mathf.Lerp for gradual transitions, I could combine separate parts of two different animations. For example, grabbing an object increases the upper body layer's weight while lower body movement continues. In this case, the grab plays on the upper half of the body independently of the walking state on the lower half.
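The gradual weight change can be sketched as a per-frame lerp towards a target. This Python version clamps `t` to the 0 to 1 range, as Unity's `Mathf.Lerp` does. In Unity, the resulting value would be passed to `Animator.SetLayerWeight`. The `speed` value and the function names are assumptions.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation with t clamped to [0, 1], like Mathf.Lerp."""
    t = min(max(t, 0.0), 1.0)
    return a + (b - a) * t


def step_upper_body_weight(current: float, is_grabbing: bool, speed: float, dt: float) -> float:
    """Move the upper body layer's weight a step towards 1 (grabbing) or 0 (not grabbing)."""
    target = 1.0 if is_grabbing else 0.0
    return lerp(current, target, speed * dt)
```

Calling this once per frame with `speed = 5` brings the weight from 0 to above 0.99 in about a second. The lower body layer keeps playing the walk cycle throughout.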

The script also incorporates idle detection, using a timer to track movement so that the animation does not flicker back and forth between walking and idle when used with the grid-based movement system. If no movement occurs within a set threshold, the animation parameters reset to idle, so players transition smoothly between poses.
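The idle detection can be sketched the same way. The assumption here is that the grid-based movement reports whether the player moved on a given frame; the IdleDetector class and the 0.2-second threshold are hypothetical examples, not values taken from Tomebound.

```python
class IdleDetector:
    """Hypothetical timer that only switches to idle after a quiet period."""

    def __init__(self, idle_threshold: float = 0.2) -> None:
        self.idle_threshold = idle_threshold  # seconds without movement before idling
        self.time_since_move = 0.0
        self.is_walking = False

    def update(self, moved_this_frame: bool, delta_time: float) -> bool:
        if moved_this_frame:
            # Any movement resets the timer and keeps the walk animation going.
            self.time_since_move = 0.0
            self.is_walking = True
        else:
            # Short pauses between grid steps do not trigger idle.
            self.time_since_move += delta_time
            if self.time_since_move >= self.idle_threshold:
                self.is_walking = False
        return self.is_walking
```

The brief pauses between grid steps stay below the threshold, so the walk animation is held instead of dropping to idle between every tile.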

Before - Single player animating & broken animations

After - Both players animating with layer masks

In addition to the systems above, I contributed to several other features, including but not limited to:

- Fixing the tome's rotation so it aligns correctly on play, and updating the directional book indicators
- Implementing the Lava Launcher enemy, adding a Bezier curve trajectory, damage, and launch and respawn delays
- Implementing a debug mode that allows level selection for testing
- Updating all menu inputs and menu navigation to work with the new Unity Input System
- Adding an object fader for obstacles obscuring the players (later replaced by an outline system)
- Collaborating on the overworld and save system, implementing it from a separate package after another developer built it
- Implementing assets and VFX/particle systems created by our 3D artists and game designers
- Polishing completed levels and scene management
- Creating the Master Systems prefab
- Managing the final build settings
- Implementing level progression and restart

Implemented overworld and save system

Object fader on obstacle block
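The Lava Launcher item in the list above mentions a Bezier curve. As an illustrative sketch only, assuming a quadratic curve whose control point sits above the midpoint between the launcher and its target (the actual curve type and control points used in Tomebound are not stated here), the projectile position at normalised flight time t could be computed as follows; launch_arc and arc_height are hypothetical names.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def quadratic_bezier(p0: Vec3, p1: Vec3, p2: Vec3, t: float) -> Vec3:
    """B(t) = (1-t)^2 P0 + 2(1-t)t P1 + t^2 P2, evaluated per axis."""
    u = 1.0 - t
    x, y, z = (u * u * a + 2.0 * u * t * b + t * t * c for a, b, c in zip(p0, p1, p2))
    return (x, y, z)


def launch_arc(start: Vec3, target: Vec3, arc_height: float, t: float) -> Vec3:
    """Hypothetical helper: projectile position at normalised flight time t."""
    t = max(0.0, min(1.0, t))  # keep the projectile on the curve
    mid = tuple((s + e) / 2.0 for s, e in zip(start, target))
    # Raise the control point above the midpoint (y-up, as in Unity).
    control = (mid[0], mid[1] + arc_height, mid[2])
    return quadratic_bezier(start, control, target, t)
```

At t = 0.5 a quadratic curve sits only arc_height / 2 above the midpoint, so the control point has to be raised twice as high as the visual peak you want.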

03

Final word

Working with RaRa Games as a Producer and Lead Game Developer this semester has been incredibly rewarding, resulting in 12 fully playable levels. This role has allowed me to enhance my technical skills in modular coding, animation blending, and optimisation, while also improving my production management abilities. Additionally, collaborating with multidisciplinary teams strengthened my ability to integrate art assets with technical systems, ultimately streamlining the production pipeline.

The results of my production methods and processes were evident in a measurable increase in productivity. Over the 10-week period, the team implemented a total of 242 changes, compared with just 45 changes checked in during the 10 months before I took on production: more than five times as many changes in roughly a quarter of the time. Of these, 52 changes were pushed on Wednesdays alone to meet sprint meeting deadlines, while the rest were deployed on an ad hoc basis as features were completed. These statistics demonstrate the effectiveness of the structured workflows and adaptive production strategies I introduced.
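As a rough worked comparison of weekly rates rather than totals, assuming the 45 earlier changes were spread evenly across the full 10 months (approximately 43.5 weeks):

```latex
r_{\text{before}} \approx \frac{45}{43.5} \approx 1.03\ \text{changes/week}, \qquad
r_{\text{after}} = \frac{242}{10} = 24.2\ \text{changes/week}, \qquad
\frac{r_{\text{after}}}{r_{\text{before}}} = \frac{242 \times 43.5}{10 \times 45} \approx 23.4
```

On that assumption, the weekly pace of development was roughly 23 times higher, a larger gain than the comparison of raw totals alone suggests.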

Though this wasn't a traditional placement, the experience has been invaluable, and I look forward to continuing my journey with RaRa Games to help bring Tomebound to release.

References

Atlassian (2024) Scrumban: Mastering Two Agile Methodologies. Atlassian. Available at: https://www.atlassian.com/agile/project-management/scrumban. [Accessed 9 November 2024].

Martins, J. (2024) What Is Kanban? A Beginner’s Guide for Agile Teams [2023]. Asana. Available at: https://asana.com/resources/what-is-kanban. [Accessed 11 December 2024].

Scrum.org (2024) What Is Scrum? Scrum.org. Available at: https://www.scrum.org/resources/what-scrum-module. [Accessed 11 December 2024].

United Nations (2024) Goal 12 | Ensure Sustainable Consumption and Production Patterns. United Nations. Available at: https://sdgs.un.org/goals/goal12. [Accessed 9 November 2024].

Unity Technologies (2019) Unity - Scripting API: Physics.Raycast. Unity3d.com. Available at: https://docs.unity3d.com/ScriptReference/Physics.Raycast.html. [Accessed 11 December 2024].

Unity Technologies (2024a) Unity - Scripting API: OverlapBoxCommand. Unity3d.com. Available at: https://docs.unity3d.com/ScriptReference/OverlapBoxCommand.html. [Accessed 11 December 2024].

Unity Technologies (2024b) Unity - Manual: Visual Effect Graph. Unity3d.com. Available at: https://docs.unity3d.com/Manual/VFXGraph.html. [Accessed 12 December 2024].

Unity Technologies (2024c) Unity - Manual: Direct blending. Unity3d.com. Available at: https://docs.unity3d.com/Manual/BlendTree-DirectBlending.html. [Accessed 11 December 2024].

Unity Technologies (2024d) Input System | Input System | 1.11.1. Unity3d.com. Available at: https://docs.unity3d.com/Packages/com.unity.inputsystem@1.11/manual/index.html. [Accessed 11 December 2024].

Unity Technologies (2024e) Unity - Scripting API: LayerMask. Unity3d.com. Available at: https://docs.unity3d.com/6000.0/Documentation/ScriptReference/LayerMask.html. [Accessed 11 December 2024].

Unity Technologies (n.d.) Unity - Scripting API: Mathf. Unity3d.com. Available at: https://docs.unity3d.com/ScriptReference/Mathf.html. [Accessed 11 December 2024].